Balancing Act: An Efficient Way to Debias Vision-Language Models

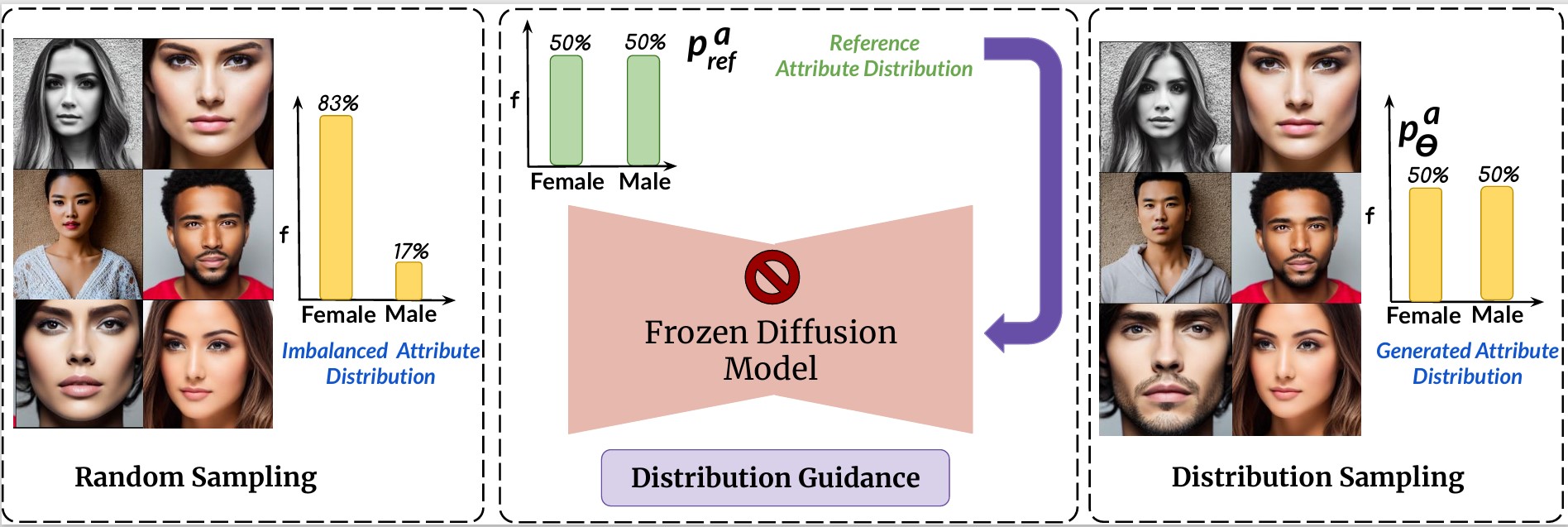

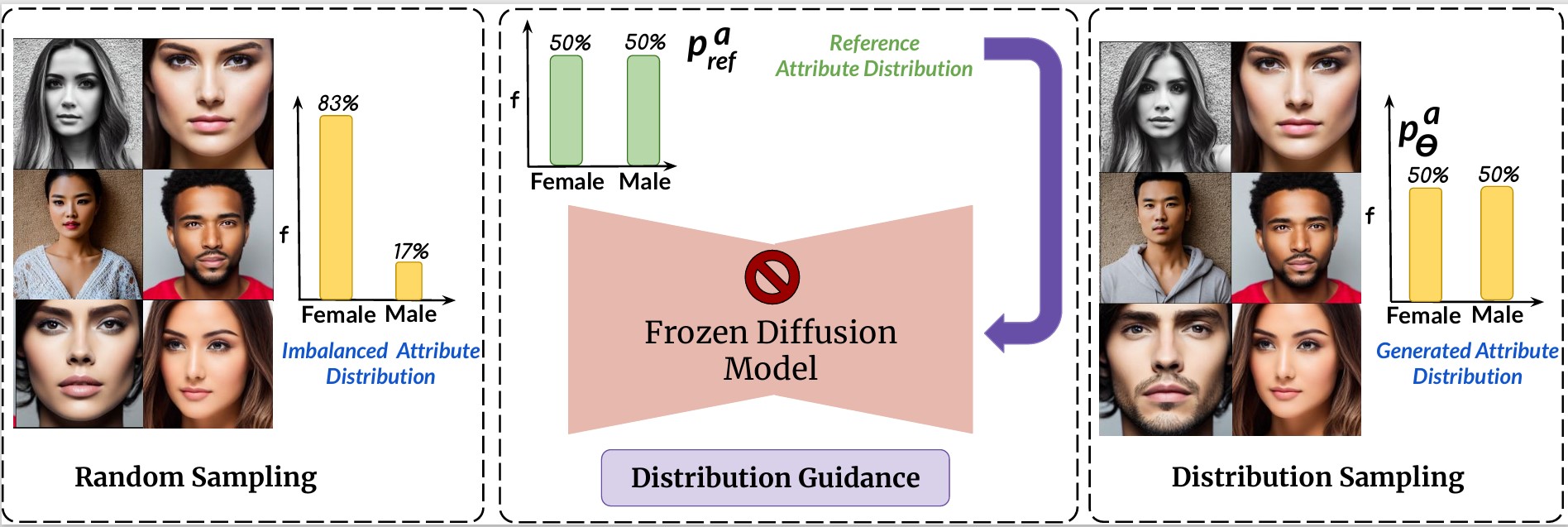

Popular generative models produce impressive images from text queries, but an important question arises: are these models fair, and if not, how can we ensure fairness? Vision-Language Models (VLMs) are trained on vast corpora of image-text pairs from the internet and inherit the biases present in that data. This bias is evident in the generated content. Curating a completely unbiased training dataset is impractical because we cannot identify all possible biases. A more practical approach is to control, or debias, the output during generation. Because diffusion models (DMs) are widely used for data augmentation and creative applications, their outputs can amplify the biases present in the training datasets. This is especially concerning for images of faces, where DMs may favor one demographic subgroup over others (for example, along gender lines, female versus male).

A team of researchers (Rishubh Parihar, Abhijnya Bhat, Abhipsa Basu, Sashwat, and Jogendra Nath Kundu) working under Prof. R. Venkatesh Babu at the Vision and AI Lab (VAL) at CDS, IISc, has proposed a method for debiasing diffusion models without relying on additional data or model retraining. Specifically, they propose Distribution Guidance, which steers the generated images to follow a prescribed attribute distribution. The key insight is that the latent features of the denoising U-Net contain rich demographic semantics that can be leveraged for guided, unbiased generation. They introduce the Attribute Distribution Predictor (ADP), a small MLP that maps the latent features to the distribution of attributes. ADP is trained with pseudo labels generated from existing attribute classifiers.
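To make the idea concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. The function names, the fixed weights, the squared-error loss, and the finite-difference gradients (a stand-in for automatic differentiation) are all assumptions chosen for illustration. A tiny MLP plays the role of the ADP, predicting attribute probabilities from each latent feature vector. The batch-level distribution is compared with a prescribed target, and the features are nudged to reduce the mismatch.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_adp(h, w1, w2):
    """Hypothetical stand-in for the ADP: one tanh hidden layer, softmax output."""
    hidden = [math.tanh(sum(w * x for w, x in zip(row, h))) for row in w1]
    logits = [sum(w * a for w, a in zip(row, hidden)) for row in w2]
    return softmax(logits)

def batch_distribution(batch, w1, w2):
    """Average the per-sample attribute probabilities over the batch."""
    probs = [toy_adp(h, w1, w2) for h in batch]
    return [sum(p[k] for p in probs) / len(probs) for k in range(len(probs[0]))]

def distribution_loss(batch, target, w1, w2):
    """Squared distance between predicted and target distributions (assumed loss)."""
    predicted = batch_distribution(batch, w1, w2)
    return sum((p - t) ** 2 for p, t in zip(predicted, target))

def guidance_step(batch, target, w1, w2, step=1.0, eps=1e-4):
    """One gradient-descent step on the features, via central finite differences."""
    grads = []
    for i, h in enumerate(batch):
        row = []
        for j in range(len(h)):
            plus = [list(v) for v in batch]
            minus = [list(v) for v in batch]
            plus[i][j] += eps
            minus[i][j] -= eps
            diff = (distribution_loss(plus, target, w1, w2)
                    - distribution_loss(minus, target, w1, w2))
            row.append(diff / (2 * eps))
        grads.append(row)
    return [[x - step * g for x, g in zip(h, row)] for h, row in zip(batch, grads)]

# Illustrative fixed weights and a batch skewed toward attribute class 0.
W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[1.0, -1.0], [-1.0, 1.0]]
features = [[1.0, -1.0], [0.8, -0.5], [1.2, -0.2], [0.5, -0.9]]
target = [0.5, 0.5]  # prescribed balanced attribute distribution

loss_before = distribution_loss(features, target, W1, W2)
guided = features
for _ in range(50):
    guided = guidance_step(guided, target, W1, W2)
loss_after = distribution_loss(guided, target, W1, W2)

print("distribution before:", batch_distribution(features, W1, W2))
print("distribution after: ", batch_distribution(guided, W1, W2))
```

In the actual method the guidance is applied during the denoising process of the diffusion model. This toy operates on fixed feature vectors only, to show how a batch-level distribution target can drive the update.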

The proposed Distribution Guidance with ADP enables fair generation, reducing bias across single and multiple attributes and significantly outperforming the baseline. The team also demonstrates a downstream use: training a fair attribute classifier by rebalancing its training set with the generated data.
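As a rough illustration of the rebalancing step, the sketch below counts how many generated samples each subgroup would need in order to match the largest subgroup in a training set. The helper name `samples_needed`, the group labels, and the "top up to the largest group" rule are assumptions made for this example, not details taken from the paper.

```python
from collections import Counter

def samples_needed(group_labels):
    """Return, per subgroup, how many extra samples would equalize group sizes."""
    counts = Counter(group_labels)
    largest = max(counts.values())
    return {group: largest - n for group, n in counts.items()}

# Hypothetical imbalanced training set: 7 samples of one group, 3 of another.
labels = ["male"] * 7 + ["female"] * 3
print(samples_needed(labels))  # {'male': 0, 'female': 4}
```

The generated images for each under-represented subgroup would then be added to the training set before the classifier is trained.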

This work was presented at the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2024, Seattle, WA, USA.

Reference:

Rishubh Parihar, Abhijnya Bhat, Abhipsa Basu, Sashwat, Jogendra Nath Kundu, R. Venkatesh Babu, Balancing Act: Distribution-Guided Debiasing in Diffusion Models, in IEEE/CVF Conference on Computer Vision and Pattern Recognition (2024).

Project Page: https://ab-34.github.io/balancing_act/

Paper [pdf]: https://openaccess.thecvf.com/content/CVPR2024/papers/Parihar_Balancing_Act_Distribution-Guided_Debiasing_in_Diffusion_Models_CVPR_2024_paper.pdf