Robots take cues from dancing bees and human gestures to deliver packages

– Ullas A

Have you ever had to make your way through a noisy room in search of a friend? Your eyes scour the room in the hope of catching sight of them, so that you can head quickly in their direction. Now picture the same thing, but with robots instead of humans. In recent work led by Abhra Roy Chowdhury, Assistant Professor at IISc’s Centre for Product Design and Manufacturing, in collaboration with Kaustubh Joshi at the University of Maryland, robots use visual cues instead of the usual network-based communication, not only to navigate but also to deliver a package with the help of a human.

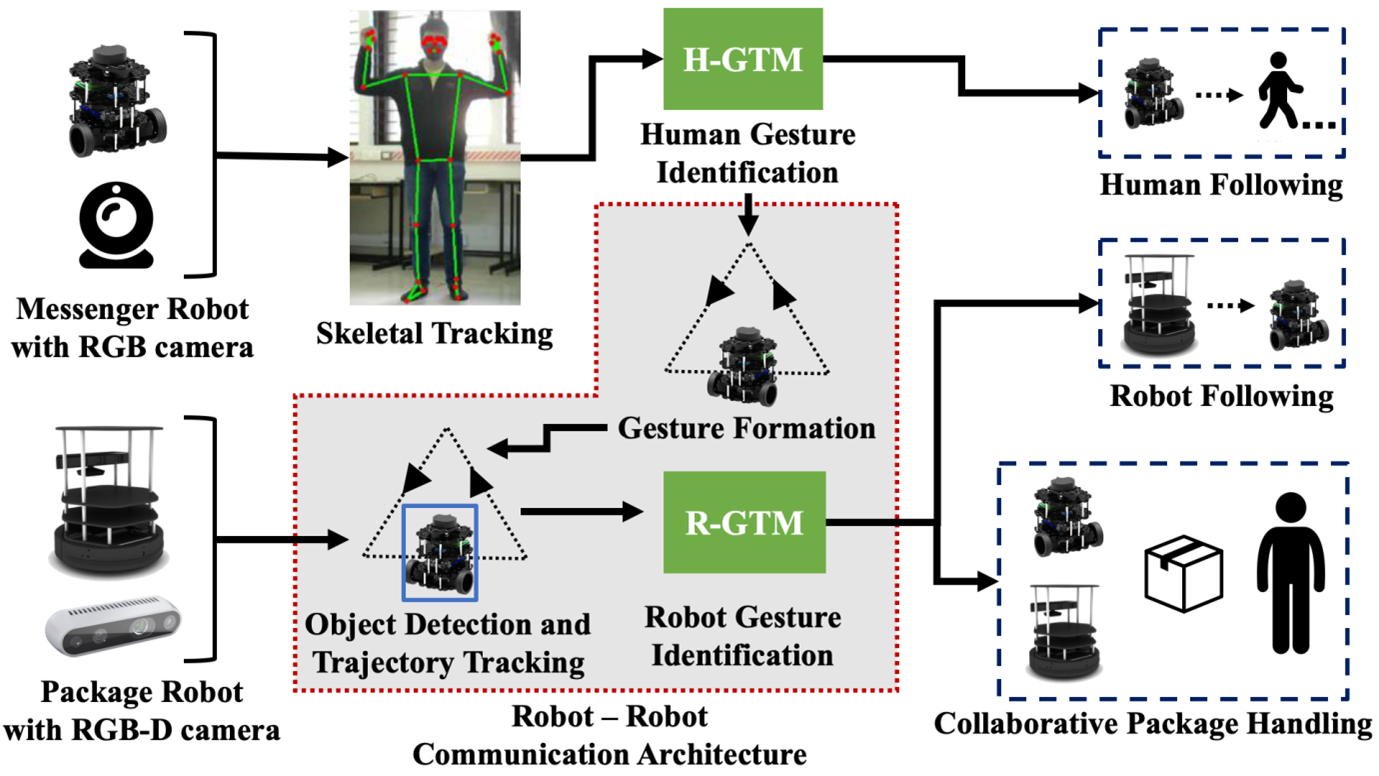

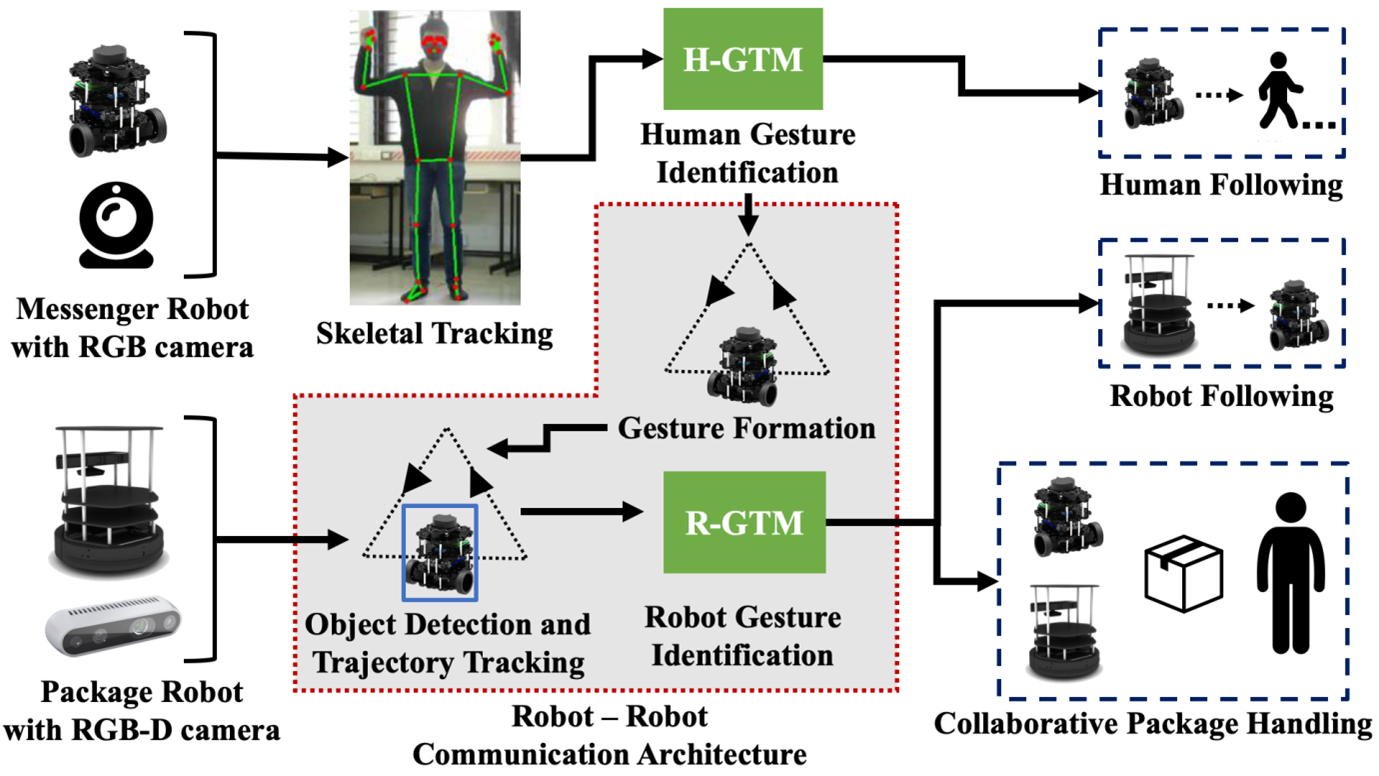

A human first uses hand gestures to tell a messenger robot where a package must be delivered. The messenger robot then relays this to a package-handling robot by moving along paths in specific geometric shapes, such as a triangle, a circle or a square, which communicate the direction of and distance to the destination. The idea is inspired by the ‘waggle dance’ that honeybees use to tell one another the direction and distance of a food source. To detect and respond to these gestures, the robots rely on an object detection algorithm combined with depth perception.
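The article does not say exactly how a traced shape maps to a direction and a distance. The sketch below illustrates one possible scheme under purely hypothetical assumptions. The messenger robot traces a regular polygon, with a circle approximated by many vertices. The first vertex points toward the destination, and the polygon's size grows with the distance to it. The observing robot is assumed to have already converted its detections and depth readings into floor positions of the shape's vertices. The names `Message`, `encode`, `decode`, `SHAPE_VERTICES` and the scale factor `DISTANCE_PER_RADIUS_M` are all invented for illustration. This is not the encoding used in the paper.

```python
import math
from dataclasses import dataclass

# HYPOTHETICAL shape vocabulary: shape name -> number of path vertices.
# A circle is approximated by a many-sided polygon.
SHAPE_VERTICES = {"triangle": 3, "square": 4, "circle": 24}

# HYPOTHETICAL scale: metres of destination distance per metre of shape radius.
DISTANCE_PER_RADIUS_M = 10.0


@dataclass
class Message:
    shape: str            # which geometric shape was traced
    direction_deg: float  # heading to the destination, degrees from the +x axis
    distance_m: float     # distance to the destination, in metres


def encode(msg: Message, centre=(0.0, 0.0)) -> list[tuple[float, float]]:
    """Messenger side: turn a message into the vertices of a closed path.

    The first vertex lies along the destination heading, and the radius of
    the shape is proportional to the distance to the destination.
    """
    n = SHAPE_VERTICES[msg.shape]
    radius = msg.distance_m / DISTANCE_PER_RADIUS_M
    start = math.radians(msg.direction_deg)
    cx, cy = centre
    return [
        (cx + radius * math.cos(start + 2 * math.pi * k / n),
         cy + radius * math.sin(start + 2 * math.pi * k / n))
        for k in range(n)
    ]


def decode(vertices: list[tuple[float, float]]) -> Message:
    """Observer side: recover the message from the observed vertex positions."""
    n = len(vertices)
    # Choose the shape whose vertex count is closest to what was observed.
    shape = min(SHAPE_VERTICES, key=lambda s: abs(SHAPE_VERTICES[s] - n))
    # The centroid of a regular polygon's vertices is its centre.
    cx = sum(x for x, _ in vertices) / n
    cy = sum(y for _, y in vertices) / n
    # The mean centre-to-vertex distance gives the radius, hence the distance.
    radius = sum(math.hypot(x - cx, y - cy) for x, y in vertices) / n
    # The first vertex, seen from the centre, gives the heading.
    fx, fy = vertices[0]
    direction = math.degrees(math.atan2(fy - cy, fx - cx)) % 360
    return Message(shape, direction, radius * DISTANCE_PER_RADIUS_M)


# Usage: a triangle traced around (2, -1) telling the receiver
# "head 45 degrees, 30 m away".
received = decode(encode(Message("triangle", 45.0, 30.0), centre=(2.0, -1.0)))
print(received.shape, round(received.direction_deg, 1), round(received.distance_m, 1))
```

Working with ideal vertex positions keeps the sketch short. A real observing robot would see a noisy trajectory, so it would first need to detect corners and fit the shape before it could decode anything.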

Such interactive robots could be deployed in search and rescue operations in environments that humans cannot safely enter, in commercial applications such as package delivery, and in industrial settings where multiple robots must coordinate with one another but communication networks are unreliable.

REFERENCE:

Joshi K, Chowdhury AR, Bio-inspired Vision and Gesture-based Robot-Robot Interaction for Human-Cooperative Package Delivery, Frontiers in Robotics and AI: 179 (2022).

https://doi.org/10.3389/frobt.2022.915884

LAB WEBSITE: https://cpdm.iisc.ac.in/ril/