Alexa, are you ready to join our dinner table conversation?

When listening to an audio podcast or conversing at the dinner table, we humans seamlessly parse the audio signal into “who” spoke “when”. Without this ability, multi-talker conversations would be incomprehensible to the auditory brain. In striking contrast, today's conversational agents (or “smart” speakers) are lousy at transcribing multi-talker conversations. These agents would very likely “decline” our invitation to join a dinner table conversation! How the human brain achieves multi-talker speech segmentation remains a scientific mystery, and understanding the mechanism could give engineers ideas for improving the design of future machine dialogue systems, benefiting human-machine interaction.

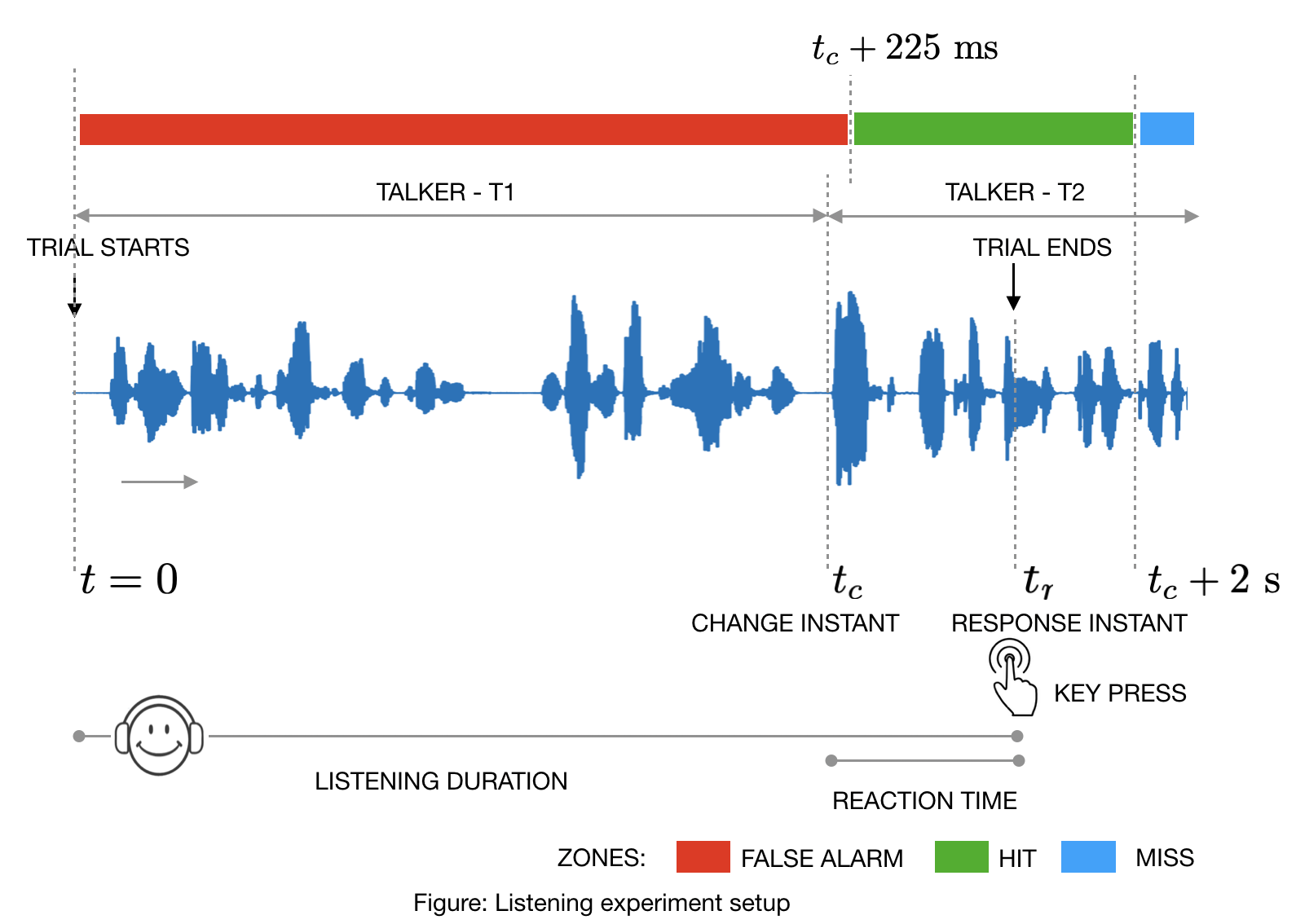

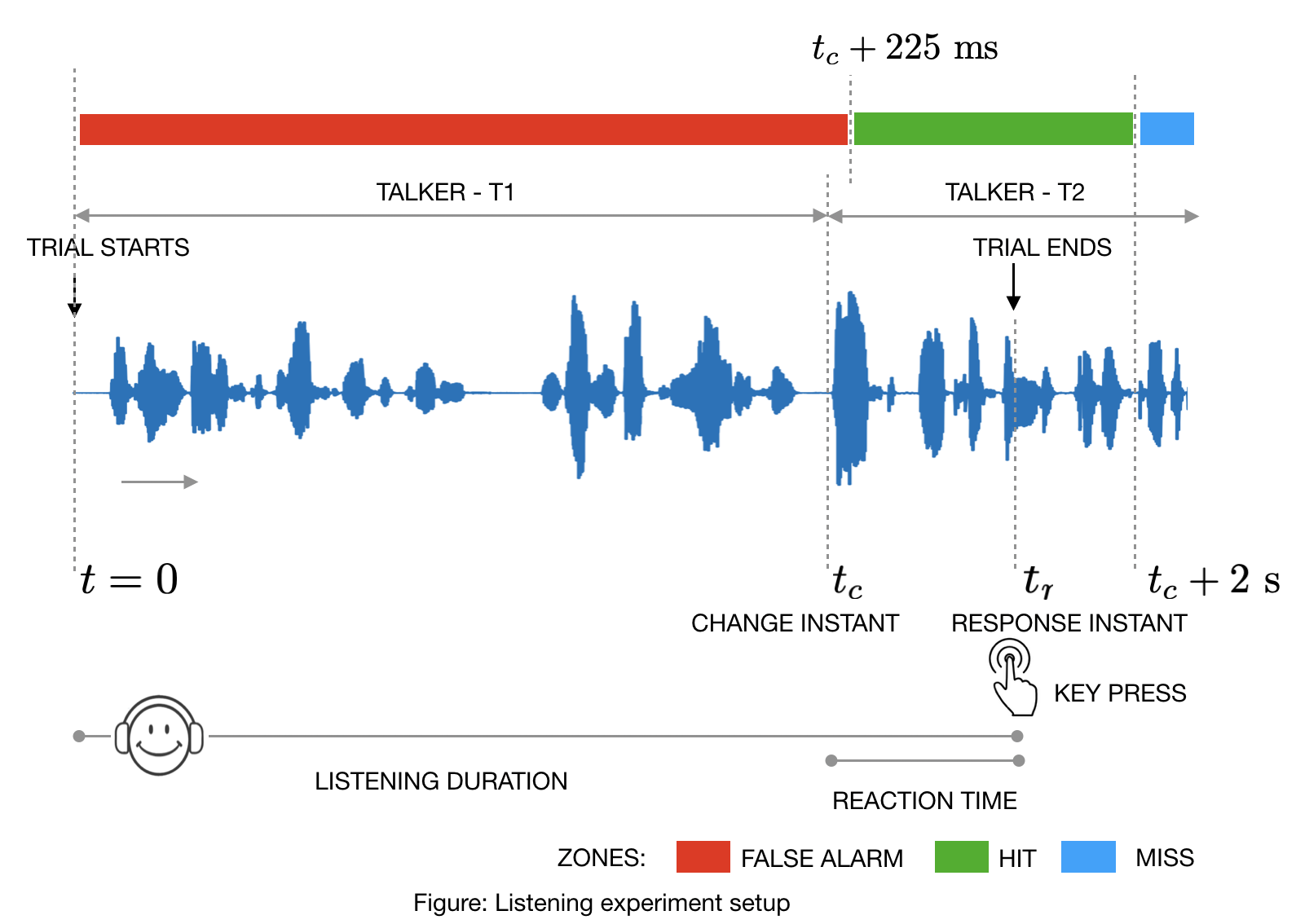

Recently, a study published in The Journal of the Acoustical Society of America explored talker perception by analyzing how quickly human listeners detect talker changes. The researchers designed an experiment in which a group of volunteers listened to audio clips of two similar voices speaking in turn and were asked to identify the exact moment one talker took over from the other. This allowed the researchers to examine the relationship between certain acoustic features and the listeners' reaction times, and to begin deciphering which cues humans attend to when deciding that the talker has changed. The study also compared human performance with that of machines and found a large gap, with humans having a significant edge over speech systems from IBM. The journal awarded the study the “Technical Area Pick for Speech Communication, 2019”. The features that humans use in talker perception, as identified in the study, may spur the development of next-generation, Alexa-like conversational agents capable of joining a business meeting or finding a seat at our home dinner party.

From left to right: Neeraj Sharma, Shobhana Ganesh, Sriram Ganapathy, Lori Holt.

The research was a fruitful collaboration between the Indian Institute of Science (IISc) and Carnegie Mellon University (CMU), Pittsburgh (USA). It was funded through the generous support of Kris Gopalakrishnan to the Indian Institute of Science via the Pratiksha Trust, the CMU-IISc BrainHub Postdoctoral Fellowship, and the Carnegie Mellon Neuroscience Institute.

Labs involved: LEAP lab (EE, IISc), Holt lab (CMU)

Published article: Sharma, N.K., Ganesh, S., Ganapathy, S., & Holt, L.L., “Talker change detection: A comparison of human and machine performance”, Journal of the Acoustical Society of America, 145, 131-142 (2019). https://asa.scitation.org/doi/10.1121/1.5084044

Website: http://leap.ee.iisc.ac.in/