Making AI explain its actions

Machine Learning and Artificial Intelligence (AI) have already started to impact many aspects of our lives, from machine translation and medical diagnosis to autonomous driving. The recent success of AI in various domains is largely attributed to advances in Deep Learning research. However, in their current state, most sophisticated AI systems, particularly those powered by Deep Learning, suffer from being ‘Black Boxes’, a metaphor for the opaque nature of their inner mechanisms. This means that even the designers cannot explain why a Deep Learning model arrived at a specific decision. Further, the opaqueness grows with the complexity of the model and hinders deployment in sensitive domains such as health care and autonomous driving. End users, on the other hand, need transparent AI systems whose actions can be trusted and easily understood by humans. Explainable, transparent AI systems are essential for developing robust and trustworthy models that can withstand various fooling attacks.

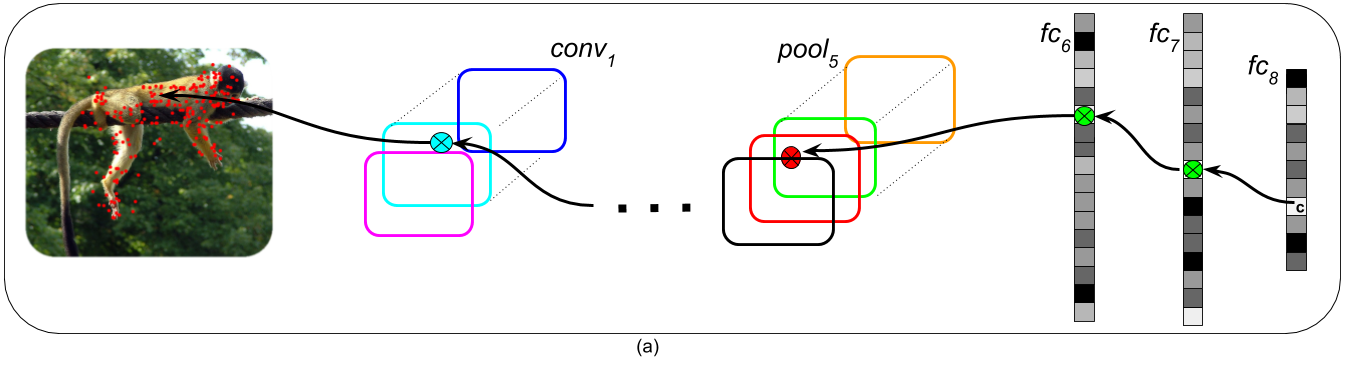

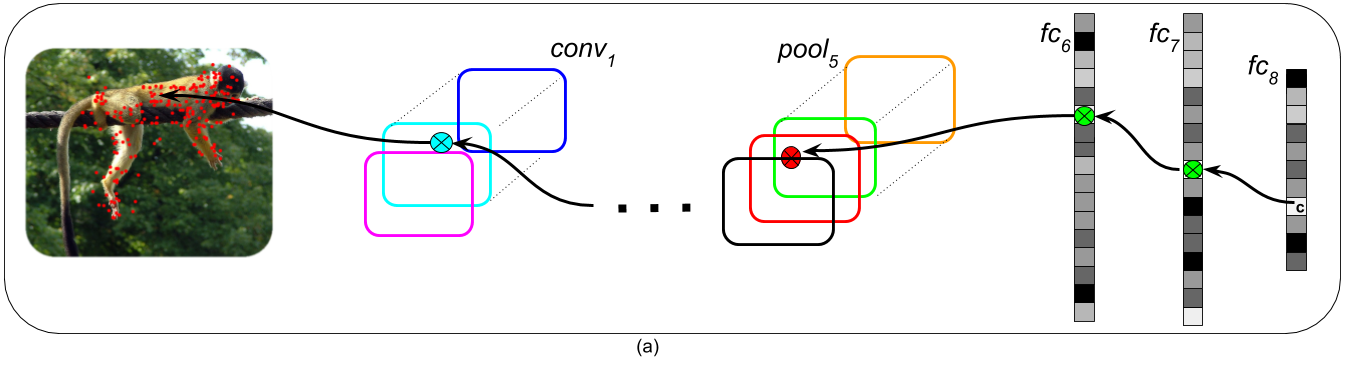

To address this issue, Konda Reddy, Utsav and Venkatesh Babu of the Video Analytics Lab (http://val.cds.iisc.ac.in/) at the Department of Computational and Data Sciences, IISc, have recently developed an approach called “CNN fixations” that explains a Deep Learning model’s predictions in terms of visual explanations. In other words, given an image, the AI system not only recognizes the presence of an object but also indicates the image regions that triggered its prediction. This simple form of feedback can make AI systems explainable, leading to a better understanding of existing models and to the development of superior, more trustworthy future models. Our approach is generic and off-the-shelf: it can make various deep neural network architectures, including recurrent varieties, explainable. With the widespread popularity of deep models, more effort should be dedicated to making them reliable by making them explainable.

Reference:

Konda Reddy Mopuri, Utsav Garg and R. Venkatesh Babu, “CNN Fixations: An unraveling approach to visualize the discriminative image regions”, IEEE Transactions on Image Processing, 2019. DOI: 10.1109/TIP.2018.2881920

Project Page: https://github.com/val-iisc/cnn-fixations

The repository contains the code for the paper and can be used to visualize predictions for four CNNs: AlexNet, VGG-16, GoogLeNet and ResNet-101.