– Sangeetha Devi Kumar

A new study from the Centre for Neuroscience (CNS) at the Indian Institute of Science (IISc) explores how well deep neural networks compare to the human brain when it comes to visual perception.

Deep neural networks are machine learning systems, inspired by the network of neurons in the human brain, that can be trained to perform specific tasks. These networks have played a pivotal role in helping scientists understand how our brains perceive the things that we see. Although deep networks have evolved significantly over the past decade, they are still nowhere close to performing as well as the human brain in perceiving visual cues. In a recent study, SP Arun, Associate Professor at CNS, and his team compared various qualitative properties of these deep networks with those of the human brain.

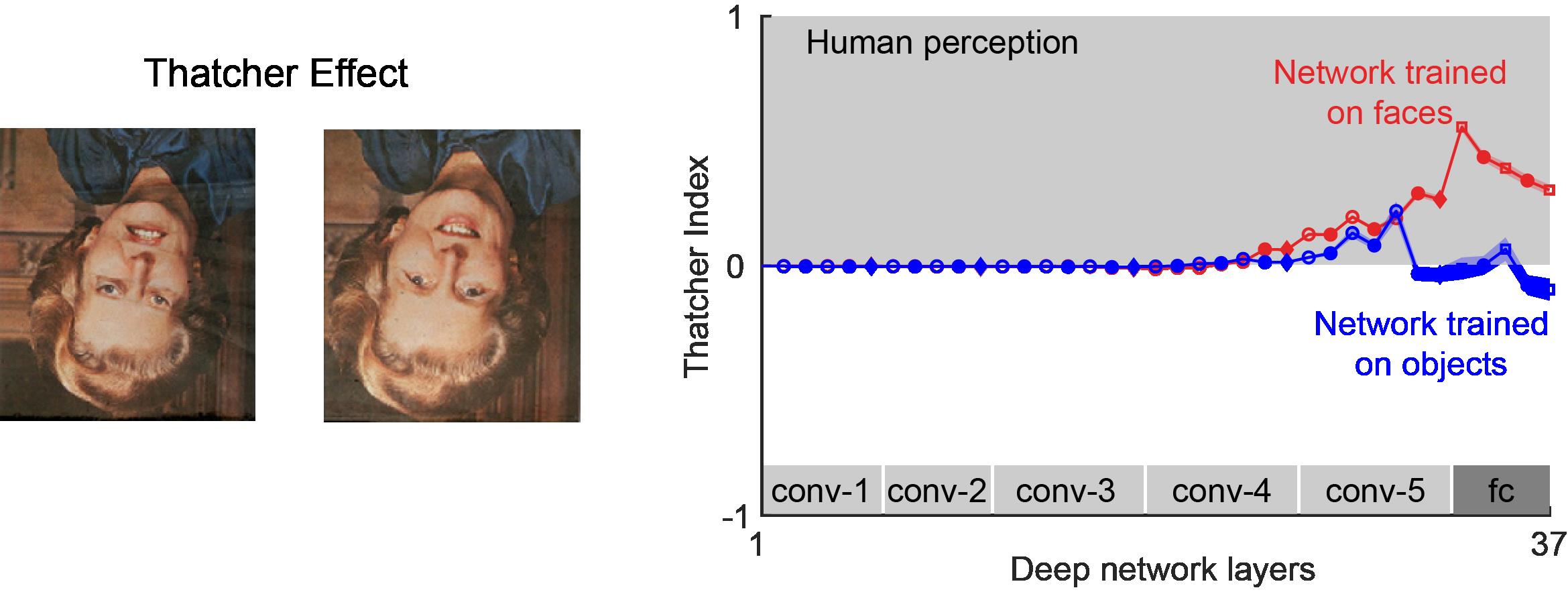

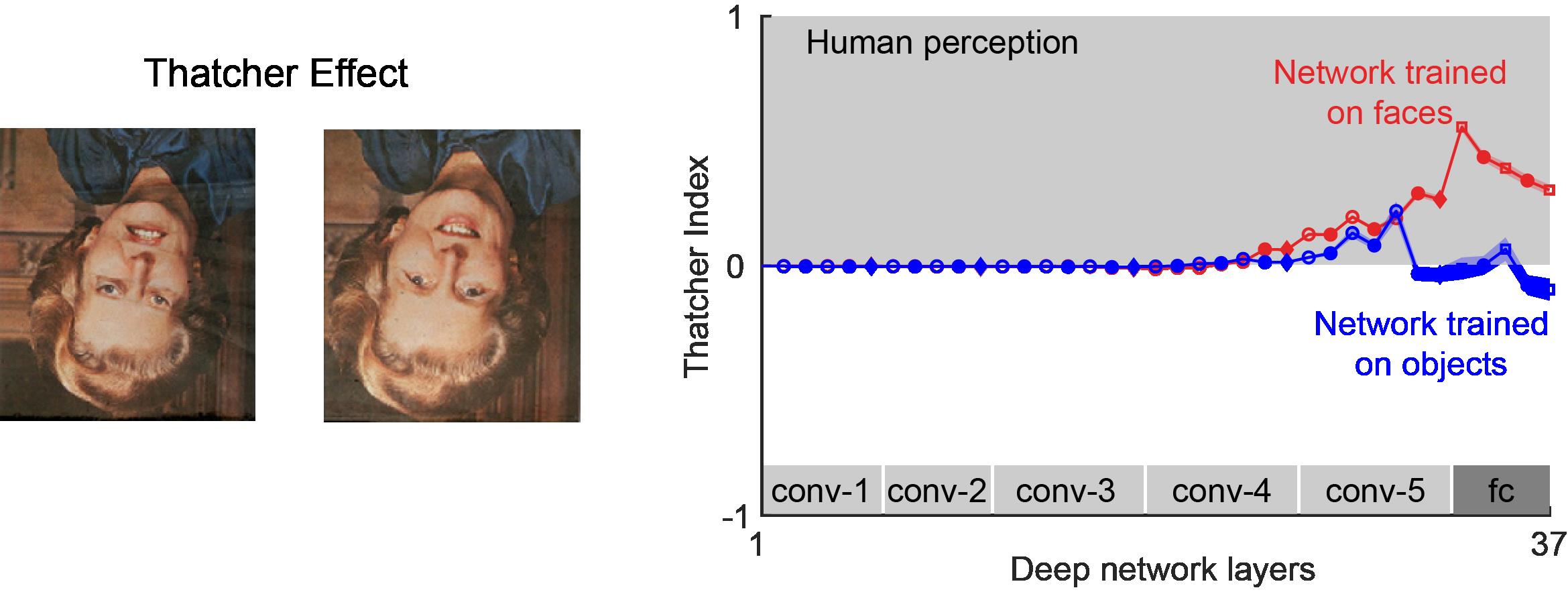

In the Thatcher Effect (left), two inverted versions of Margaret Thatcher look deceptively similar, but look dramatically different if you rotate this page upside-down. By comparing the distance between such upright and inverted faces in deep networks, the authors were able to track whether the Thatcher effect arises in deep networks trained on objects or in deep networks trained on faces. Figure adapted from Jacob et al., 2021.

Although deep networks are a good model for understanding how the human brain visualises objects, they work differently from it. While complex computation is trivial for them, certain tasks that are relatively easy for humans can be difficult for these networks to complete. In the current study, published in Nature Communications, Arun and his team attempted to understand which visual tasks these networks can perform naturally by virtue of their architecture, and which require further training.

The team studied 13 different perceptual effects and uncovered previously unknown qualitative differences between deep networks and the human brain. An example is the Thatcher effect, a phenomenon in which humans easily notice local feature changes in an upright image but find them difficult to spot when the image is flipped upside-down. Deep networks trained to recognise upright faces showed a Thatcher effect when compared with networks trained to recognise objects. The researchers also tested these networks for another visual property of the human brain, called mirror confusion. To humans, mirror reflections along the vertical axis appear more similar than those along the horizontal axis. The researchers found that deep networks also show stronger mirror confusion for vertically reflected images than for horizontally reflected ones.
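As a rough illustration of how mirror confusion can be framed as a distance comparison, the sketch below flips a toy image about each axis and measures how far each mirror lands from the original. It is not the study's code: the function names, the index formula, and the use of raw pixel values in place of a network's internal activations are all assumptions made for illustration.

```python
import math


def flip_about_vertical_axis(img):
    """Left-right mirror: reverse the pixels within each row."""
    return [row[::-1] for row in img]


def flip_about_horizontal_axis(img):
    """Top-bottom mirror: reverse the order of the rows."""
    return img[::-1]


def distance(a, b):
    """Euclidean distance between two equally sized 2D grids of numbers."""
    return math.sqrt(
        sum((x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    )


def mirror_confusion_index(img, features=lambda im: im):
    """Hypothetical index: positive when the vertical-axis mirror is closer
    to the original than the horizontal-axis mirror.

    `features` is a stand-in for a network layer and must return a 2D grid;
    by default it just returns the raw pixels (an assumption).
    """
    original = features(img)
    d_vertical = distance(original, features(flip_about_vertical_axis(img)))
    d_horizontal = distance(original, features(flip_about_horizontal_axis(img)))
    if d_vertical + d_horizontal == 0:
        return 0.0  # image is identical to both of its mirrors
    return (d_horizontal - d_vertical) / (d_horizontal + d_vertical)


# Toy image: almost left-right symmetric, clearly not top-bottom symmetric.
toy = [
    [0, 1, 0],
    [1, 1, 1],
    [1, 0, 2],
]
index = mirror_confusion_index(toy)
print(f"mirror confusion index: {index:.2f}")
```

A positive index means the vertical-axis mirror sits closer to the original than the horizontal-axis mirror, which is the direction of the effect described for humans. To probe a real network, `features` would be swapped for the activations of one of its layers.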

Another phenomenon peculiar to the human brain is that it focuses on coarser details first. This is known as the global advantage effect. For example, in an image of a tree, our brain would first see the tree as a whole before noticing the details of the leaves in it. Similarly, when presented with an image of a face, humans first look at the face as a whole, and then focus on finer details like the eyes, nose, mouth and so on, explains Georgin Jacob, first author and PhD student at CNS. “Surprisingly, neural networks showed a local advantage,” he says. This means that unlike the brain, the networks focus on the finer details of an image first. Therefore, even though these neural networks and the human brain carry out the same object recognition tasks, the steps followed by the two are very different.

“Lots of studies have been showing similarities between deep networks and brains, but no one has really looked at systematic differences,” says Arun, who is the senior author of the study. Identifying these differences can push us closer to making these networks more brain-like.

Such analyses can help researchers build more robust neural networks that not only perform better but are also immune to “adversarial attacks” that aim to derail them.

REFERENCE:

Georgin Jacob, RT Pramod, Harish Katti, SP Arun (2021), Qualitative similarities and differences in visual object representations between brains and deep networks, Nature Communications, 12, 1872.

https://doi.org/10.1038/s41467-021-22078-3

CONTACT:

SP Arun

Associate Professor

Centre for Neuroscience

Indian Institute of Science

sparun@iisc.ac.in

+91 80 2293 3436/3431

Vision Lab Website

NOTE TO JOURNALISTS:

a) If any of the text in this release is reproduced verbatim, please credit the IISc press release.

b) For any queries about IISc press releases, please write to news@iisc.ac.in OR pro@iisc.ac.in