In a new study, researchers at the Indian Institute of Science (IISc) show how a brain-inspired image sensor can go beyond the diffraction limit of light to detect minuscule objects, such as cellular components or nanoparticles, that are invisible to current microscopes. Their novel technique, which combines optical microscopy with a neuromorphic camera and machine learning algorithms, is a major step forward in pinpointing objects smaller than 50 nanometers in size. The results are published in Nature Nanotechnology.

Since the invention of optical microscopes, scientists have strived to surpass a barrier called the diffraction limit, which means that a microscope cannot distinguish between two objects that are closer together than a certain distance (typically 200-300 nanometers). Their efforts have largely focused on either modifying the molecules being imaged or developing better illumination strategies – some of which led to the 2014 Nobel Prize in Chemistry. “But very few have actually tried to use the detector itself to try and surpass this detection limit,” says Deepak Nair, Associate Professor at the Centre for Neuroscience (CNS), IISc, and corresponding author of the study.
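For a sense of where the 200-300 nanometer figure comes from, Ernst Abbe's classical estimate of the smallest resolvable separation $d$, for light of wavelength $\lambda$ and an objective lens of numerical aperture $\mathrm{NA}$, is shown below. The example wavelength and aperture are illustrative assumptions, not the parameters used in the study.

$$
d = \frac{\lambda}{2\,\mathrm{NA}}, \qquad \text{e.g. } \lambda = 600\ \text{nm},\ \mathrm{NA} = 1.2 \;\Rightarrow\; d = \frac{600\ \text{nm}}{2.4} = 250\ \text{nm}
$$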

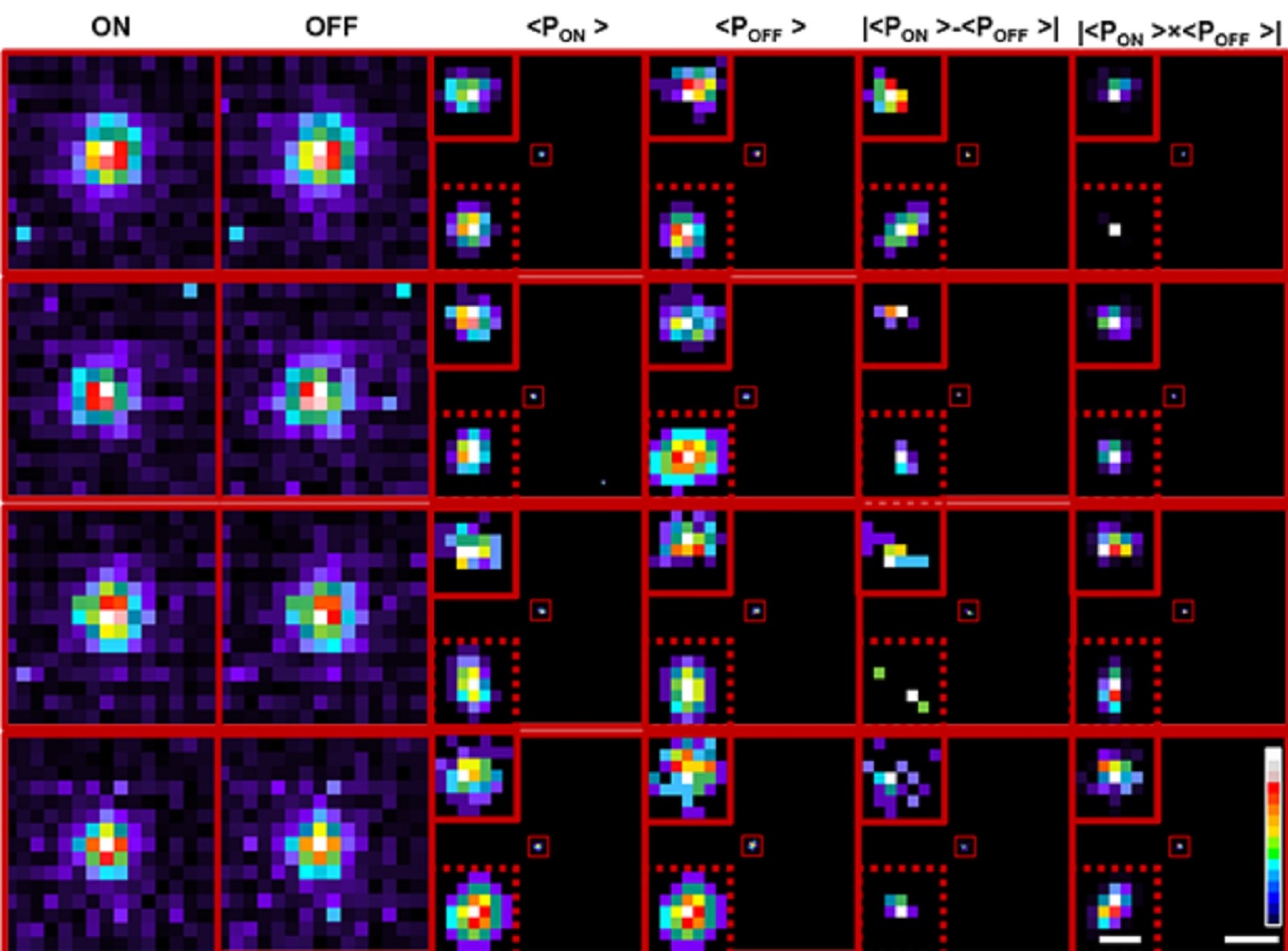

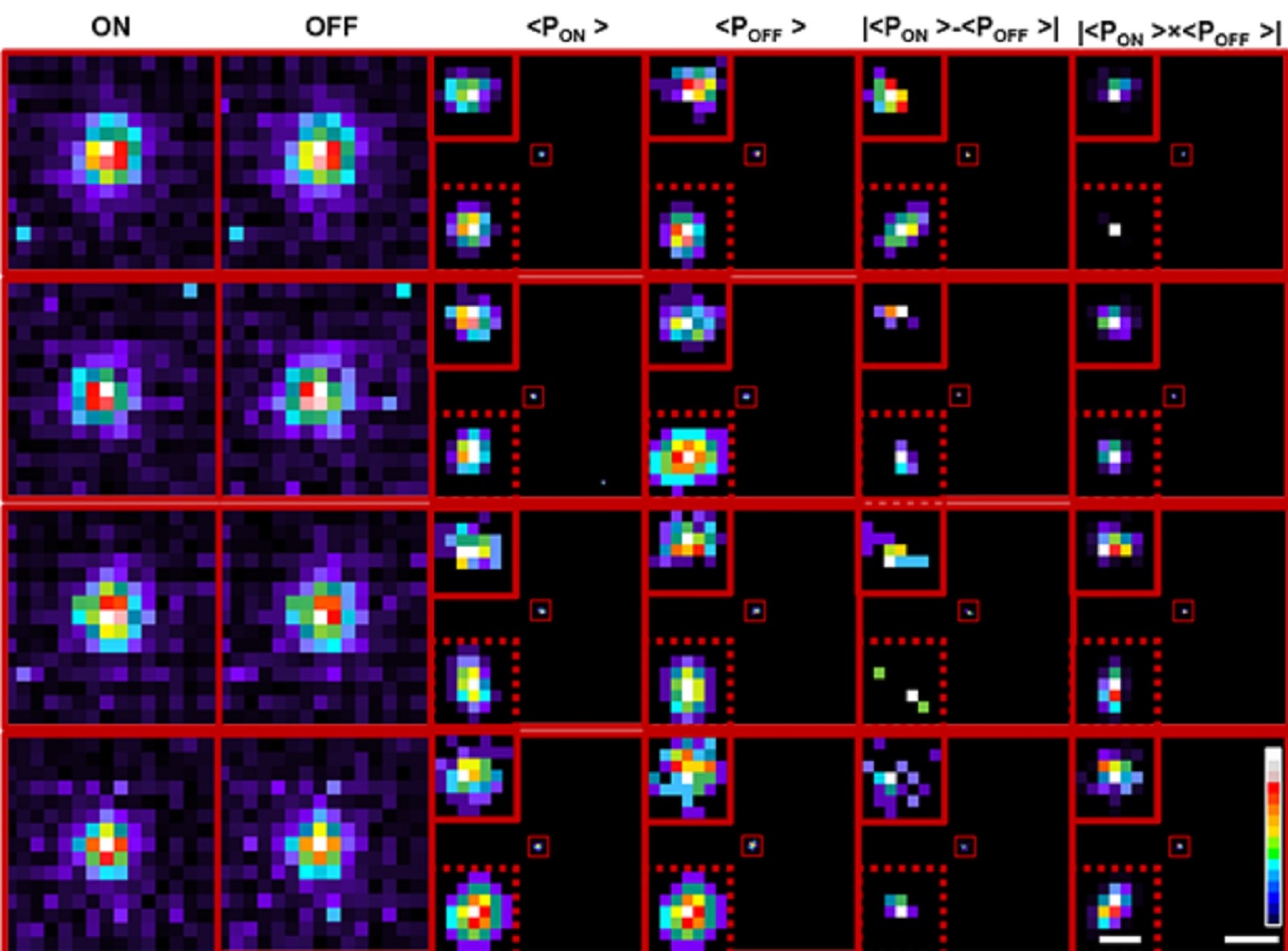

Transformation of cumulative probability density of ON and OFF processes allows localisation below the limit of classical single particle detection (Credit: Mangalwedhekar et al, 2023)

Measuring roughly 40 mm (height) by 60 mm (width) by 25 mm (diameter) and weighing about 100 grams, the neuromorphic camera used in the study mimics the way the human retina converts light into electrical impulses, and has several advantages over conventional cameras. In a typical camera, each pixel records the intensity of the light falling on it throughout the exposure time, and the values from all the pixels are combined to reconstruct an image of the object. In a neuromorphic camera, each pixel operates independently and asynchronously, generating an event, or spike, only when the intensity of the light falling on it changes. The result is sparse data, and far less of it than a traditional camera produces, since a traditional camera records every pixel value at a fixed rate whether or not anything in the scene has changed. Like the retina, a neuromorphic camera can therefore “sample” the environment with much higher temporal resolution, because it is not limited by a frame rate, and it can also suppress the unchanging background.
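The event-generation rule described above can be sketched in a few lines of Python. This is a simplified model, not the camera's actual circuitry: it assumes each pixel compares the logarithm of the incoming intensity with the value at which it last fired, and emits one ON or OFF event when the difference exceeds a contrast threshold. The function name, the threshold of 0.2 and the frame-based input are hypothetical choices for illustration; a real sensor responds continuously in time rather than to sampled frames.

```python
import math


def events_from_intensities(frames, threshold=0.2, eps=1e-6):
    """Emit (t, x, y, polarity) events from a sequence of 2D intensity frames.

    Hypothetical model: each pixel stores the log-intensity at which it last
    fired and emits an ON (+1) or OFF (-1) event whenever the current
    log-intensity differs from that reference by at least `threshold`.
    """
    # Reference log-intensity per pixel, initialised from the first frame
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = math.log(value + eps) - ref[y][x]
                if abs(delta) >= threshold:
                    polarity = 1 if delta > 0 else -1
                    events.append((t, x, y, polarity))
                    ref[y][x] = math.log(value + eps)  # reset the reference
    return events


# Hypothetical 2x2 scene in which only the pixel at x=1, y=0 changes
frames = [
    [[10, 10], [10, 10]],
    [[10, 20], [10, 10]],  # pixel brightens -> ON event
    [[10, 20], [10, 10]],  # nothing changes -> no events
    [[10, 5], [10, 10]],   # pixel dims -> OFF event
]
events = events_from_intensities(frames)
```

Only the pixel whose brightness changed produces events, and nothing is emitted while the scene is static, which is where the sparse data and background suppression come from.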

“Such neuromorphic cameras have a very high dynamic range (>120 dB), which means that you can go from a very low-light environment to very high-light conditions. The combination of the asynchronous nature, high dynamic range, sparse data, and high temporal resolution of neuromorphic cameras makes them well-suited for use in neuromorphic microscopy,” explains Chetan Singh Thakur, Assistant Professor at the Department of Electronic Systems Engineering (DESE), IISc, and co-author.
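Assuming the $20\log_{10}$ convention commonly used to quote image-sensor dynamic range, the >120 dB figure corresponds to a ratio of more than a million to one between the brightest and dimmest light levels the sensor can handle:

$$
\mathrm{DR} = 20\log_{10}\!\left(\frac{I_{\max}}{I_{\min}}\right)\ \text{dB}
\quad\Rightarrow\quad
120\ \text{dB} \;\Leftrightarrow\; \frac{I_{\max}}{I_{\min}} = 10^{120/20} = 10^{6}
$$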

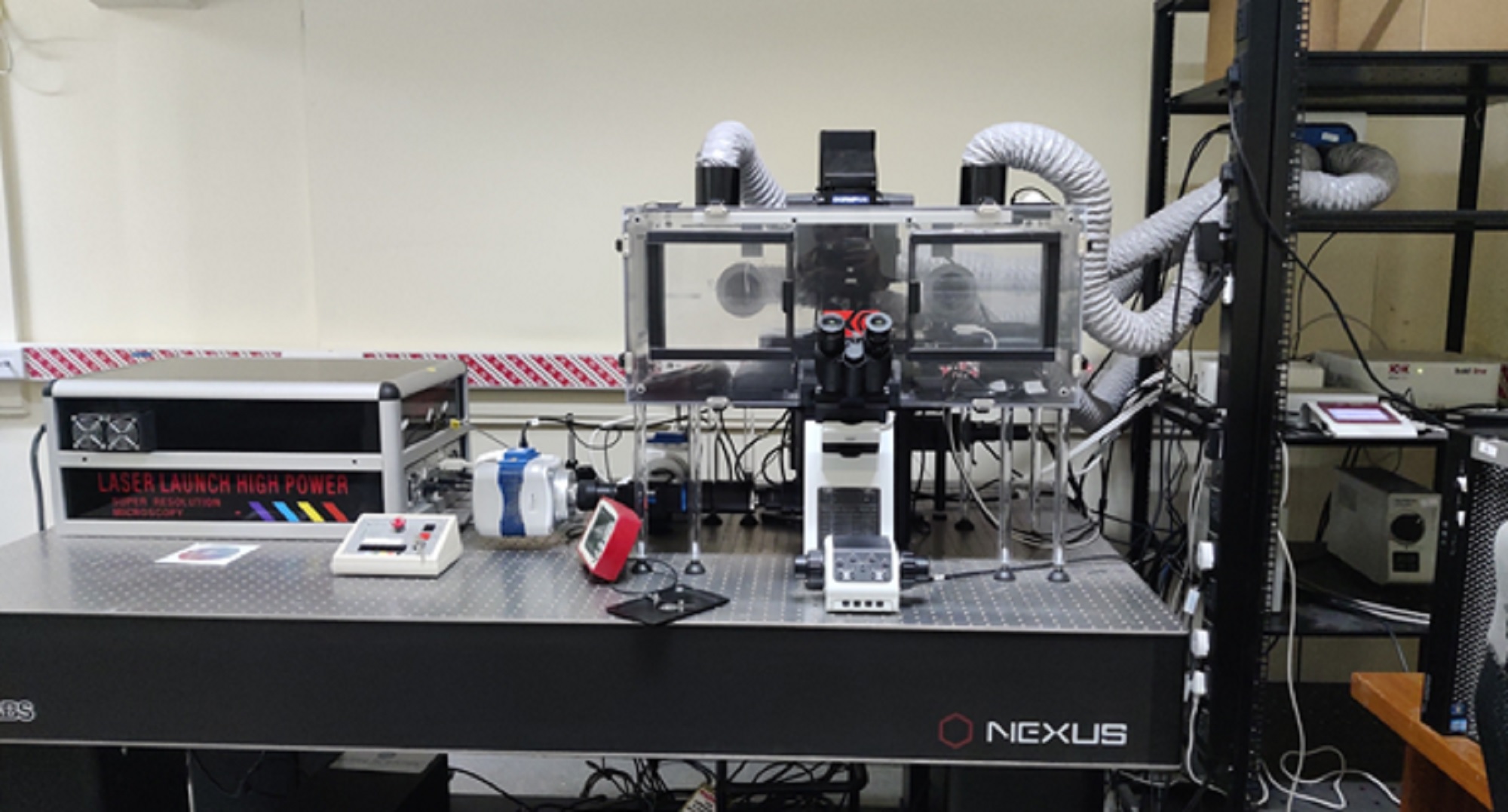

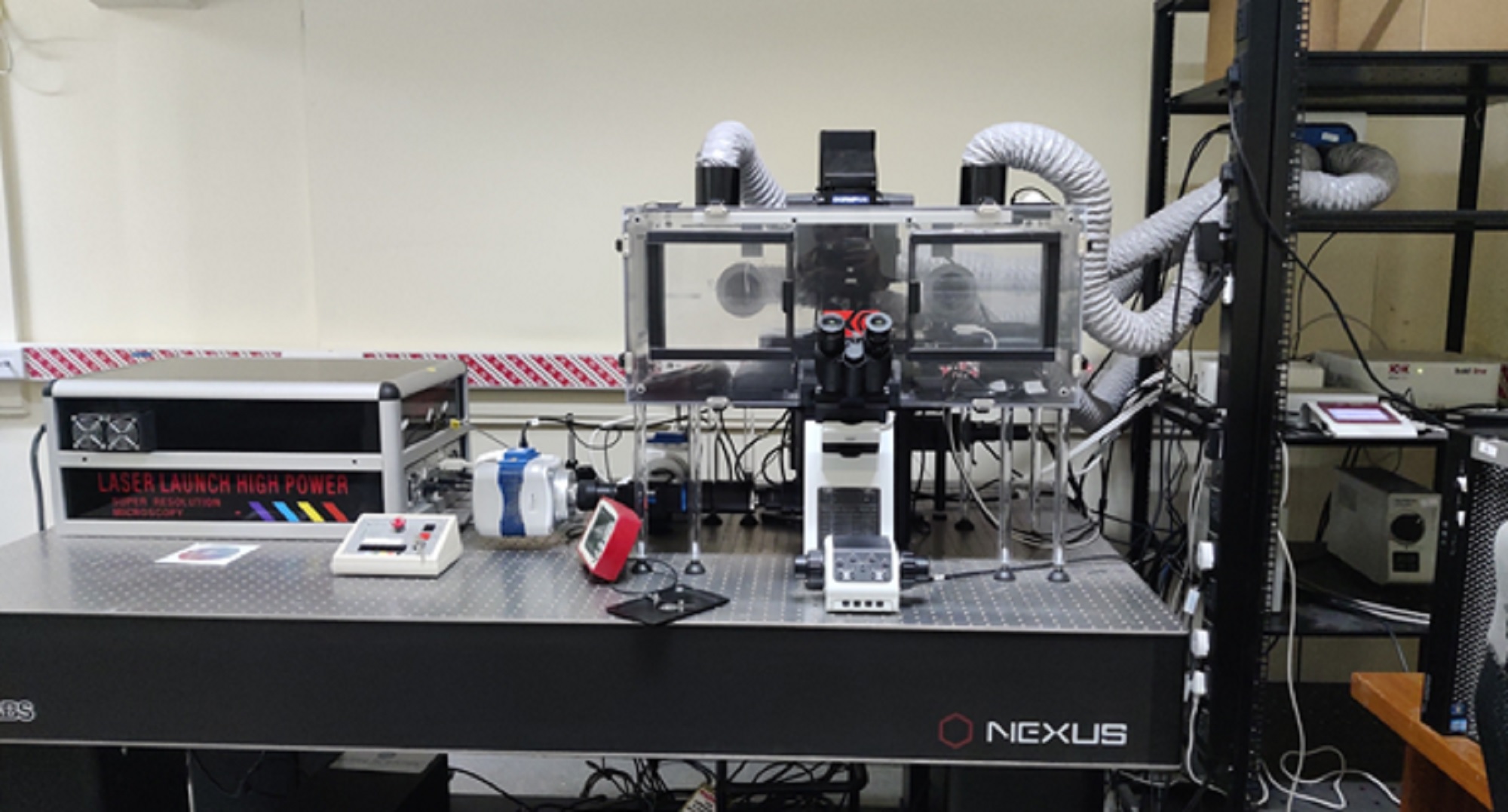

View of the microscopy setup (Credit: Rohit Mangalwedhekar)

In the current study, the group used their neuromorphic camera to pinpoint individual fluorescent beads smaller than the diffraction limit by shining laser pulses at both high and low intensities and measuring the resulting changes in fluorescence. When the intensity increases, the camera records the signal as an “ON” event; when it decreases, the camera records an “OFF” event. The data from these events were then pooled to reconstruct frames.
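Pooling events into frames can be illustrated with a minimal sketch. It assumes events arrive as hypothetical `(timestamp, x, y, polarity)` tuples and that the ON and OFF events inside a chosen time window are simply counted per pixel into two separate images. The study's actual reconstruction may differ; the function name, window bounds and frame size here are arbitrary.

```python
def accumulate_frames(events, width, height, t_start, t_end):
    """Count ON and OFF events in [t_start, t_end) into two separate 2D images."""
    on = [[0] * width for _ in range(height)]
    off = [[0] * width for _ in range(height)]
    for t, x, y, polarity in events:
        if t_start <= t < t_end:
            target = on if polarity > 0 else off
            target[y][x] += 1
    return on, off


# Hypothetical events (timestamp_us, x, y, polarity) from one laser pulse
events = [
    (5, 1, 1, +1), (6, 1, 1, +1), (7, 2, 1, +1),  # intensity rises -> ON
    (55, 1, 1, -1), (58, 2, 1, -1),               # intensity falls -> OFF
]
on_frame, off_frame = accumulate_frames(events, width=4, height=3, t_start=0, t_end=100)
```

Keeping ON and OFF counts in separate images preserves the polarity information, so each can be analysed on its own.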

To locate the fluorescent particles accurately within the frames, the team used two methods. The first, explains Rohit Mangalwedhekar, a former research intern at CNS and first author of the study, was a deep learning algorithm that predicted where the centroid of each object was likely to be; it was trained on about one and a half million simulated images that closely resembled the experimental data. The second was a wavelet segmentation algorithm, which determined the centroids of the particles separately for the ON and the OFF events. Combining the predictions from both methods allowed the team to pinpoint each object’s location more accurately than existing techniques.
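As a much simpler stand-in for the deep learning and wavelet segmentation methods, the sketch below estimates a particle's position as the intensity-weighted centroid of an ON frame and of an OFF frame, then averages the two. It is not the study's algorithm; the function names, the equal-weight averaging rule and the toy frames are assumptions. It does show why localisation can be finer than the pixel grid: the centroid is a weighted mean, so it can fall between pixels.

```python
def weighted_centroid(frame):
    """Return the (x, y) centre of mass of a 2D count image, or None if it is empty."""
    total = sum(sum(row) for row in frame)
    if total == 0:
        return None
    cx = sum(x * v for row in frame for x, v in enumerate(row)) / total
    cy = sum(y * v for y, row in enumerate(frame) for v in row) / total
    return cx, cy


def combine_estimates(*estimates):
    """Average the available (x, y) estimates with equal weight, skipping missing ones."""
    valid = [e for e in estimates if e is not None]
    if not valid:
        return None
    n = len(valid)
    return (sum(e[0] for e in valid) / n, sum(e[1] for e in valid) / n)


# Hypothetical ON and OFF count images of the same particle
on_frame = [[0, 0, 0, 0], [0, 2, 1, 0], [0, 0, 0, 0]]
off_frame = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
on_xy = weighted_centroid(on_frame)
off_xy = weighted_centroid(off_frame)
position = combine_estimates(on_xy, off_xy)  # sub-pixel (x, y) in pixel units
```

Multiplying the result by the pixel size in the sample plane, an instrument-specific value not given here, would convert it to nanometers.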

“In biological processes like self-organisation, you have molecules that are alternating between random or directed movement, or that are immobilised,” explains Nair. “Therefore, you need to have the ability to locate the centre of this molecule with the highest precision possible so that we can understand the thumb rules that allow the self-organisation.” Using this technique, the team closely tracked a fluorescent bead moving freely in an aqueous solution. The approach could therefore find wide application in precisely tracking and understanding stochastic processes in biology, chemistry and physics.
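Once a bead has been localised frame by frame, one common way to sort its motion into the random, directed or immobilised behaviour Nair describes is the mean squared displacement (MSD). The release does not say which analysis the team used; the sketch below is a generic illustration, and the function name and tracks are hypothetical.

```python
def mean_squared_displacement(track, max_lag):
    """MSD for lags 1..max_lag of a 2D track of (x, y) positions sampled at equal intervals."""
    if not 1 <= max_lag < len(track):
        raise ValueError("max_lag must be between 1 and len(track) - 1")
    msd = {}
    for lag in range(1, max_lag + 1):
        squared = [
            (track[i + lag][0] - track[i][0]) ** 2 + (track[i + lag][1] - track[i][1]) ** 2
            for i in range(len(track) - lag)
        ]
        msd[lag] = sum(squared) / len(squared)
    return msd


# Hypothetical tracks, in pixels, one position per frame
directed = [(0, 0), (1, 0), (2, 0), (3, 0)]     # steady drift
immobilised = [(2, 2), (2, 2), (2, 2), (2, 2)]  # no movement
msd_directed = mean_squared_displacement(directed, max_lag=3)
msd_immobilised = mean_squared_displacement(immobilised, max_lag=3)
```

As a rule of thumb, an MSD that grows roughly linearly with lag suggests random (diffusive) motion, growth closer to quadratic suggests directed motion, as in the drifting track here, and a flat MSD suggests an immobilised particle.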

REFERENCE:

Mangalwedhekar R, Singh N, Thakur CS, Seelamantula CS, Jose M, Nair D, Achieving nanoscale precision using neuromorphic localization microscopy, Nature Nanotechnology (2023).

https://www.nature.com/articles/s41565-022-01291-1

CONTACT:

Deepak Nair

Associate Professor

Centre for Neuroscience (CNS)

Indian Institute of Science (IISc)

Email: deepak@iisc.ac.in

Phone: 080-22933535

Website: https://cns.iisc.ac.in/deepak/index.html

Chetan Singh Thakur

Assistant Professor

Department of Electronic Systems Engineering (DESE)

Indian Institute of Science (IISc)

Email: csthakur@iisc.ac.in

Phone: 080-22933608

Website: https://labs.dese.iisc.ac.in/neuronics/

IMAGE CAPTIONS AND CREDITS:

Image 1: Transformation of cumulative probability density of ON and OFF processes allows localisation below the limit of classical single particle detection (Credit: Mangalwedhekar et al, 2023)

Image 2: View of the microscopy setup (Credit: Rohit Mangalwedhekar)

NOTE TO JOURNALISTS:

a) If any of the text in this release is reproduced verbatim, please credit the IISc press release.

b) For any queries about IISc press releases, please write to news@iisc.ac.in or pro@iisc.ac.in.