Where Deep Networks fail, and how we can save them

Deep Neural Networks have achieved outstanding success across a remarkable range of tasks, from Alexa in our homes to self-driving cars and AlphaGo beating human champions. Progress in the field is faster than ever, producing algorithms that increasingly blur the line between human and machine performance. But how secure are these Deep Networks? How can we protect these systems from attackers who try to exploit them? And how do we evaluate the worst-case performance of such models before deploying them in critical applications such as autonomous navigation systems?

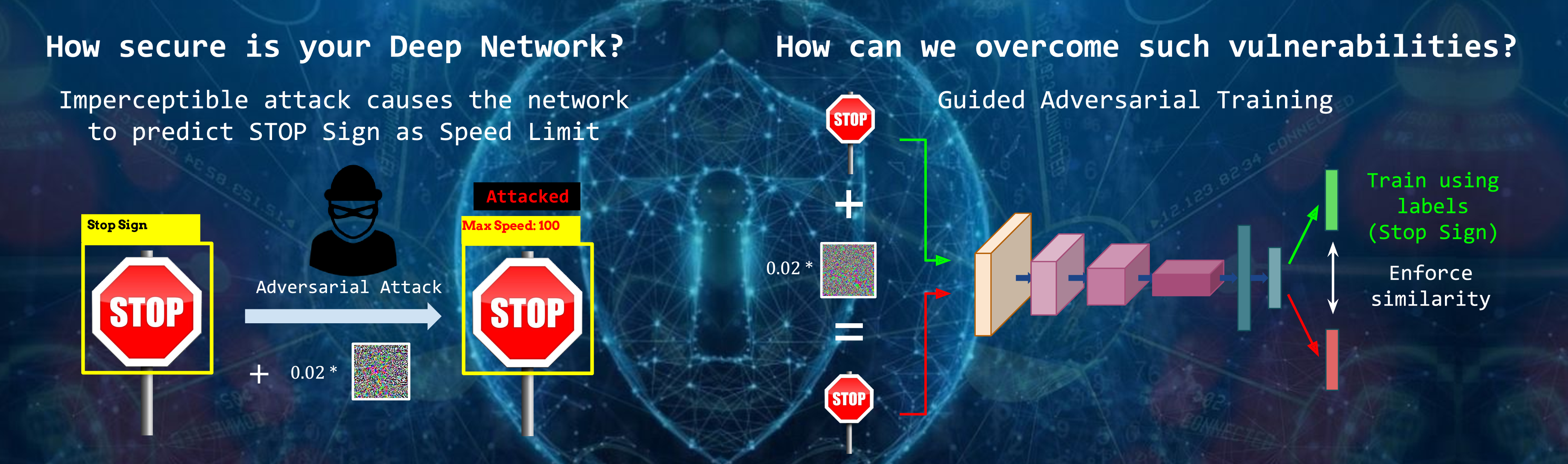

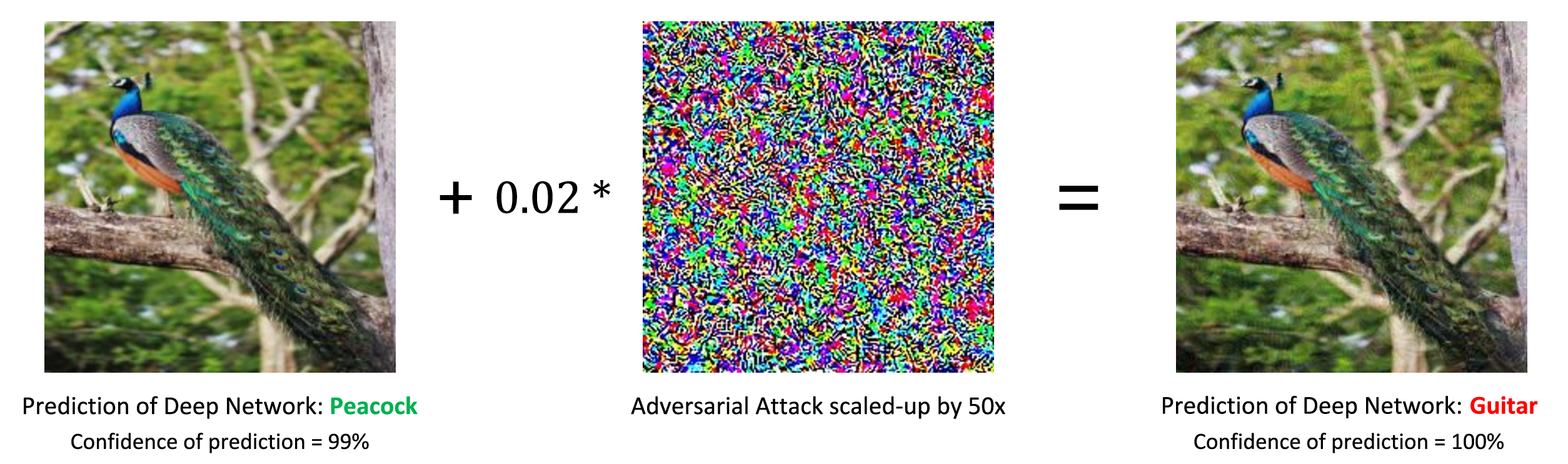

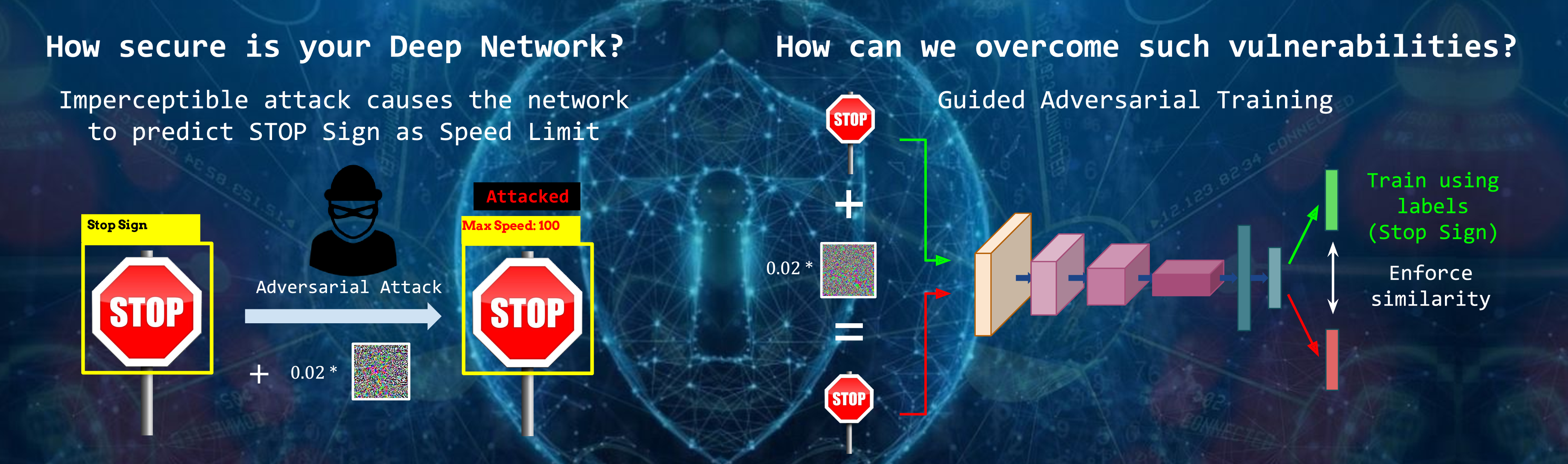

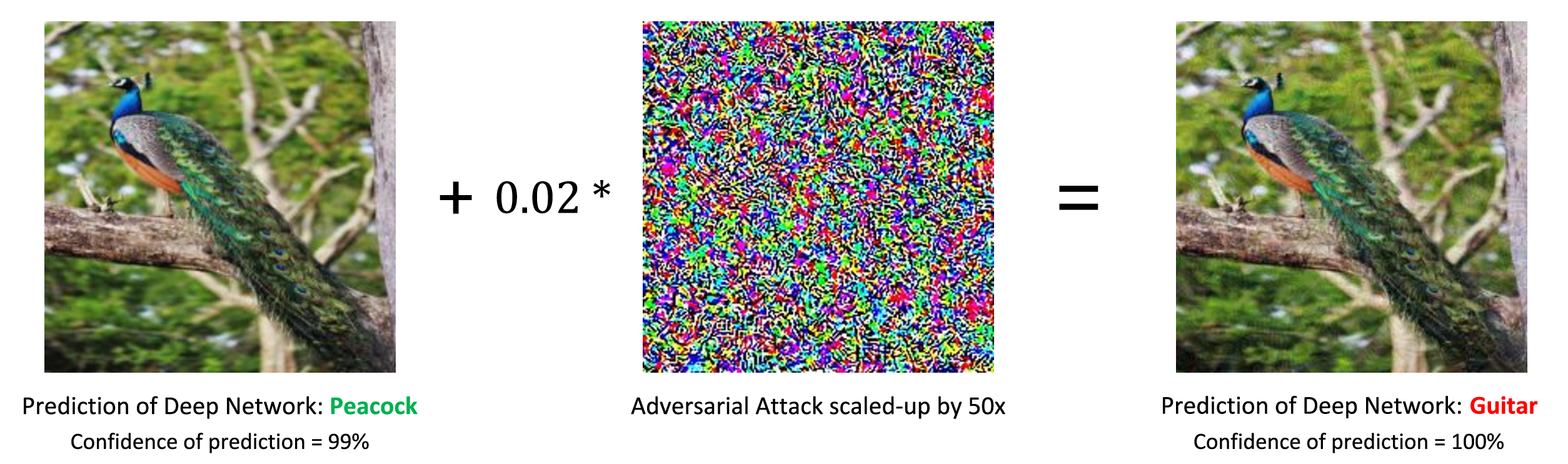

Deep Neural Networks can be easily fooled by adding carefully crafted, nearly imperceptible noise to their inputs; such manipulations are known as Adversarial Attacks. As shown in the figure, although humans cannot distinguish between the normal and attacked images of a peacock, the attack can lead the network to predict a completely unrelated class (such as guitar) with very high confidence.
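To make the idea of "invisible noise" concrete, here is a minimal sketch of the classic one-step Fast Gradient Sign Method (FGSM) applied to a toy logistic-regression model. It is not GAMA or any method from the paper; the weights `w`, bias `b`, input `x` and budget `eps` are hypothetical values chosen for illustration. Each pixel moves by at most `eps`, but because every pixel moves in the direction that hurts the model, the logit shifts by `eps` times the L1 norm of the weights, which is large in high dimensions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(w, b, x):
    """Return class 1 if the logit is positive, else class 0."""
    return int(w @ x + b > 0)

def fgsm_linear(w, b, x, y, eps):
    """One-step L-infinity attack (FGSM-style) on a logistic-regression model.

    The gradient of the binary cross-entropy loss with respect to the input
    is (sigmoid(w.x + b) - y) * w; stepping along its sign increases the loss.
    """
    grad = (sigmoid(w @ x + b) - y) * w
    x_adv = x + eps * np.sign(grad)
    return np.clip(x_adv, 0.0, 1.0)  # keep pixels in the valid [0, 1] range

# Hypothetical toy setup: 1000 "pixels", alternating-sign weights.
d = 1000
w = np.where(np.arange(d) % 2 == 0, 0.1, -0.1)
b = 1.0
x = np.full(d, 0.5)  # a flat grey "image", correctly classified as class 1
y = 1

x_adv = fgsm_linear(w, b, x, y, eps=0.02)
print(predict(w, b, x), predict(w, b, x_adv))  # 1 0
print(np.max(np.abs(x_adv - x)))  # largest per-pixel change (about 0.02)
```

Here the clean logit is 1.0, while the perturbed logit is 1.0 - 0.02 × 100 = -1.0, so a 2% change per pixel is enough to flip the prediction. Deep networks are far more complex, and stronger attacks typically take many gradient steps, but the same principle of following the loss gradient under a small per-pixel budget applies.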

An attacker could potentially fool a self-driving car into reading a stop sign as a yield sign, or a speed limit of 35 as 85, with disastrous consequences.

A group of researchers (Gaurang Sriramanan, Sravanti Addepalli, Arya Baburaj and Prof. R. Venkatesh Babu) from the Video Analytics Lab at the Department of Computational and Data Sciences (CDS) has been developing attacks that identify these weaknesses more effectively, along with defenses that safeguard these models from adversaries. Their recent work, presented at NeurIPS 2020, introduces a novel attack, GAMA (Guided Adversarial Margin Attack), which reliably estimates the worst-case performance of Deep Networks, and a novel single-step adversarial defense, GAT (Guided Adversarial Training), which uses the proposed guided attack to train robust models efficiently.

More details:

Video

Code

Paper

Reference:

“Guided Adversarial Attack for Evaluating and Enhancing Adversarial Defenses”, Gaurang Sriramanan, Sravanti Addepalli, Arya Baburaj and R. Venkatesh Babu, in Advances in Neural Information Processing Systems (NeurIPS), 2020

Authors: